Walkero

software engineer, Drupal follower, Docker ninja, JS explorer, PHP believer, exotic hardware beta tester, Amiga enthusiast, truth seeker, parent & husband... at least for now...

I am surely not the first to say this, but over the last year or more I have noticed a tremendous increase in traffic to my websites and web applications, with 90% of it coming from scanner bots and LLM agents. Every day I hear about people fighting them, trying to keep their websites alive while keeping their costs as low as possible. The impact of these scanning bots is not only a lot of extra traffic on your server; sometimes they are so aggressive that they look like a DDoS attack.

I had a similar experience last week, when my Git server started being scanned ferociously from many different IPs all over the world. I use Gitea as my personal Git server because it gives me freedom in how I manage my code and in choosing which repositories stay private and which are public. I run it with Docker, behind Traefik as a reverse proxy that handles all the incoming requests and the SSL certificates.

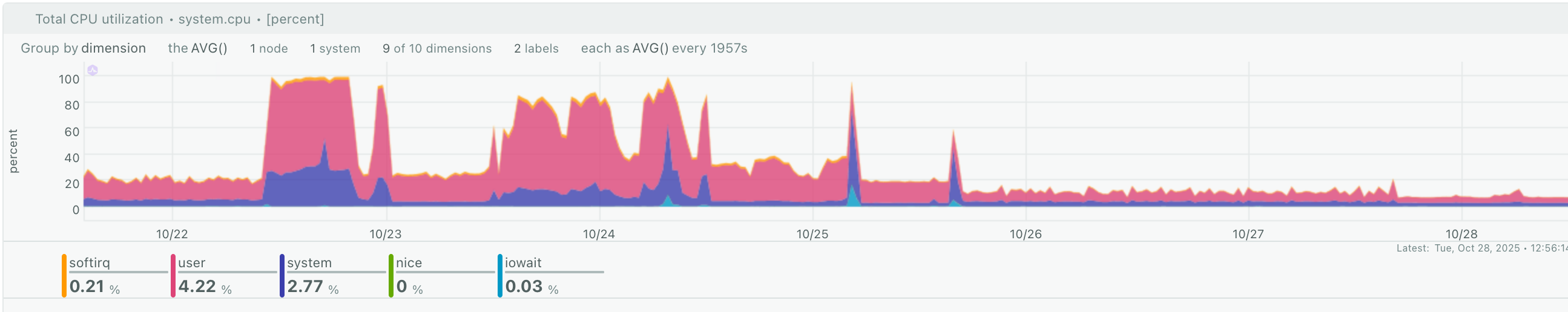

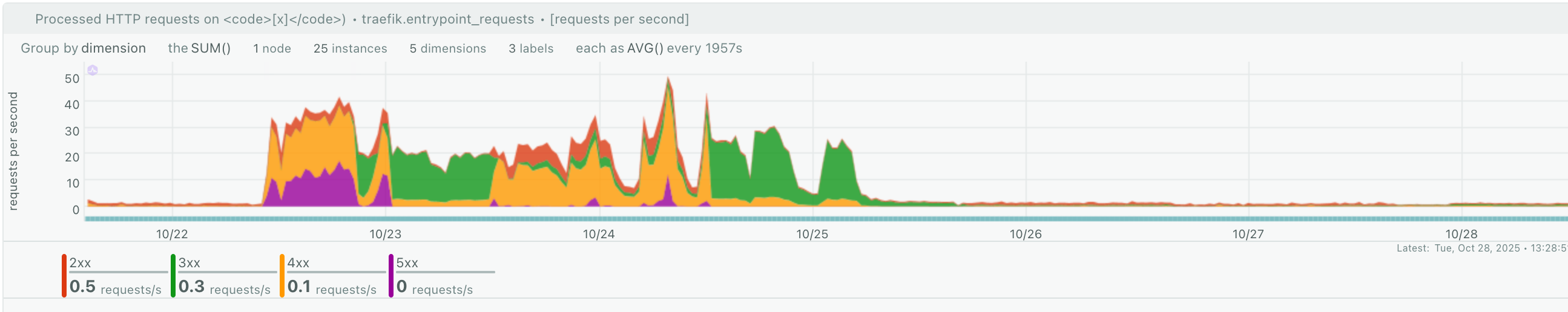

So, one day last week I got alerts from my Uptime Kuma server that my Git server was down, which I confirmed by trying to access it. Checking the server metrics in Netdata Cloud, the monitoring tool I love to use, I noticed that the whole cloud server was at 100% CPU usage and the Traefik proxy was responding mainly with 400 and 500 errors.

After logging in to the server, I saw that the Gitea container was receiving the majority of the requests, and stopping it was enough to make the CPU usage drop to 10%. That was good proof that my Gitea server was under "attack".

I restarted it and started looking at the traffic which, as I described above, was coming from IPs all over the world, so it was not easy to block with a firewall. The requests also did not share a specific User-Agent that I could disallow in robots.txt, and robots.txt only helps with bots that respect it (which most do not). So I looked for a smarter way to block them, at the point where requests first enter my server: Traefik.

So, after this long introduction, let me tell you how I solved the problem. Traefik supports plugins, which can be installed as add-ons. After testing a few others, I decided to use the Captcha Protect and Bot Wrangler plugins. To install them, I added the following to the Traefik static configuration:

```yaml
experimental:
  plugins:
    wrangler:
      moduleName: github.com/holysoles/bot-wrangler-traefik-plugin
      version: v0.6.0
    captcha-protect:
      moduleName: github.com/libops/captcha-protect
      version: v1.9.4
```

You can find more information on how to install Traefik plugins at https://plugins.traefik.io/install.

I had to restart Traefik to get those plugins installed and ready to use. Installing them does not protect anything by itself, though: every project that should use them needs its Traefik labels updated. So, I edited the docker-compose.yml file of my Gitea installation and added the following labels:

```yaml
labels:
  # Declaring the middleware chain:
  - "traefik.http.routers.gitea.middlewares=gitea-filtered"
  - "traefik.http.middlewares.gitea-filtered.chain.middlewares=bot-wrangler,captcha-protect"
  - "traefik.http.middlewares.bot-wrangler.plugin.wrangler.loglevel=ERROR"
  - "traefik.http.middlewares.bot-wrangler.plugin.wrangler.botAction=BLOCK"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.rateLimit=40"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.ipv4subnetMask=16"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.window=86400"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.protectRoutes=/"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.captchaProvider=hcaptcha"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.siteKey=<SITE_KEY>"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.secretKey=<SECRET_KEY>"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.persistentStateFile=/tmp/state.json"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.exemptIps=1.2.3.4/24"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.enableStatsPage=false"
  - "traefik.http.middlewares.captcha-protect.plugin.captcha-protect.loglevel=ERROR"
```

For the captcha, I used hCaptcha because I already had an account there, and I added the Gitea website to my list of sites. That gave me the site key and the secret key, which go into the labels shown above. I also added my home IP to exemptIps so that I would not block myself. Finally, I rebuilt the Gitea container so that the changes would be applied.
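To make the effect of rateLimit=40, ipv4subnetMask=16, window=86400 and exemptIps more concrete, here is a minimal Python sketch of how such settings could combine. It is a simplified model built on my own assumptions, not Captcha Protect's actual code: the SubnetLimiter class and its needs_challenge method are hypothetical, every IPv4 address in the same /16 shares one request counter, the counter resets when the window expires, and exempt ranges are never challenged.

```python
import ipaddress
import time


class SubnetLimiter:
    """Hypothetical, simplified per-subnet rate limiter (IPv4 only).

    NOT Captcha Protect's code: it only illustrates how settings similar to
    rateLimit, ipv4subnetMask, window and exemptIps could work together.
    """

    def __init__(self, rate_limit=40, mask_bits=16, window=86400, exempt=()):
        self.rate_limit = rate_limit  # requests allowed per subnet per window
        self.mask_bits = mask_bits    # prefix length used to group client IPs
        self.window = window          # seconds before a subnet's count resets
        # strict=False accepts host bits, so "1.2.3.4/24" becomes 1.2.3.0/24.
        self.exempt = [ipaddress.ip_network(c, strict=False) for c in exempt]
        self.state = {}  # subnet -> (request count, window end timestamp)

    def needs_challenge(self, ip, now=None):
        """Record one request from ip; return True if it should get a captcha."""
        now = time.time() if now is None else now
        addr = ipaddress.ip_address(ip)
        if any(addr in net for net in self.exempt):
            return False  # exempt ranges are never challenged or counted
        # e.g. 203.0.113.7 with mask_bits=16 is grouped under 203.0.0.0/16
        subnet = ipaddress.ip_network(f"{ip}/{self.mask_bits}", strict=False)
        count, window_end = self.state.get(subnet, (0, 0))
        if now >= window_end:
            count, window_end = 0, now + self.window  # start a fresh window
        count += 1
        self.state[subnet] = (count, window_end)
        return count > self.rate_limit


limiter = SubnetLimiter(rate_limit=40, mask_bits=16, window=86400, exempt=["1.2.3.4/24"])
# 41 requests at t=0 from 41 different IPs, all inside 203.0.0.0/16
results = [limiter.needs_challenge(f"203.0.{i}.{i + 1}", now=0) for i in range(41)]
print(results.count(True))  # 1: only the 41st request is challenged
```

With this kind of grouping, a scanner rotating through many addresses in the same /16 still draws from one shared budget, which plain per-IP blocking would miss.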

With these changes, the flood of requests coming from different IPs is asked to pass a captcha test, which the bots fail. If they are known bad bots, Bot Wrangler, which comes first in the chain, blocks them before that. Either way, the offenders get an error response but, more crucially, they never reach my Gitea installation, so no extra database queries are made. This way the CPU usage drops dramatically, and the offending IPs slowly get blocked.
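The order of the chain matters. The sketch below is a hedged Python model of the gitea-filtered chain, not the plugins' real code: the bot_wrangler and captcha_protect functions, the KNOWN_BAD_BOTS list and the string results are all hypothetical, and the captcha step is reduced to a per-/16 request counter. It shows that a request stopped by either middleware never calls the backend, so only requests within a subnet's budget cost Gitea any work.

```python
import ipaddress

# Hypothetical user-agent fragments for illustration only; Bot Wrangler
# ships and maintains its own list of known bots.
KNOWN_BAD_BOTS = ("GPTBot", "CCBot", "Bytespider")

hits = {"gitea": 0}  # how many requests actually reached the backend


def gitea(request):
    """Stand-in for the Gitea container: every call costs CPU and database work."""
    hits["gitea"] += 1
    return "ok"


def bot_wrangler(next_handler):
    """Simplified model of botAction=BLOCK: reject known bot user agents."""
    def handler(request):
        user_agent = request.get("User-Agent", "").lower()
        if any(bot.lower() in user_agent for bot in KNOWN_BAD_BOTS):
            return "blocked"
        return next_handler(request)
    return handler


def captcha_protect(next_handler, rate_limit=40, mask_bits=16):
    """Simplified model: once a subnet exceeds rate_limit, answer with a challenge.

    The real setup lets a human who solves the captcha through; that step and
    the time window are left out here.
    """
    counts = {}

    def handler(request):
        subnet = ipaddress.ip_network(f"{request['ip']}/{mask_bits}", strict=False)
        counts[subnet] = counts.get(subnet, 0) + 1
        if counts[subnet] > rate_limit:
            return "challenge"  # bots fail the captcha, so they stop here
        return next_handler(request)
    return handler


# Same order as the chain label: bot-wrangler first, then captcha-protect.
app = bot_wrangler(captcha_protect(gitea))

scanner = [app({"ip": f"203.0.{i}.1", "User-Agent": "Mozilla/5.0"}) for i in range(100)]
crawler = [app({"ip": "192.0.2.10", "User-Agent": "Mozilla/5.0 (compatible; GPTBot/1.0)"})
           for _ in range(5)]
human = [app({"ip": "198.51.100.7", "User-Agent": "Mozilla/5.0"}) for _ in range(3)]
print(scanner.count("challenge"), crawler.count("blocked"), hits["gitea"])  # 60 5 43
```

Here 100 scanner requests from one /16 cost the backend only 40 calls, the crawler never gets past the first middleware, and the human visitor on another network is unaffected.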

You can see the results below, though yours may vary. The first screenshot shows the server's CPU usage before and during the attack, as well as after I applied the changes described above.

The second screenshot shows Traefik's behaviour over the same time period and the responses it returned.

Keep in mind that the attack may take a few days to stop, if it ever does, but your website will stay alive and public, with its content available to the humans who need it. After all, that is the purpose of sharing your work online: to help people solve problems and collaborate with others for a better result.